Building a platformer has been something of a holy grail for me since I first started tinkering with Unity. I grew up playing NES games like Super Mario Bros., so in some ways platformers are what I think of when I think of video games.

It’s not hard to find third-party utilities to help with this, depending on what level of functionality you want and what price you’re willing to pay.

Eventually, however, you’re going to have to bite the bullet and learn what’s going on inside of the black box. Either your third-party script’s settings are just plain confusing, or you want it to interact with the world in a way it wasn’t designed for. (For me, it was trying to build Castlevania II-style stairs.) Even if you don’t roll your own, digging into the guts of a platforming script isn’t exactly straightforward.

The Basics

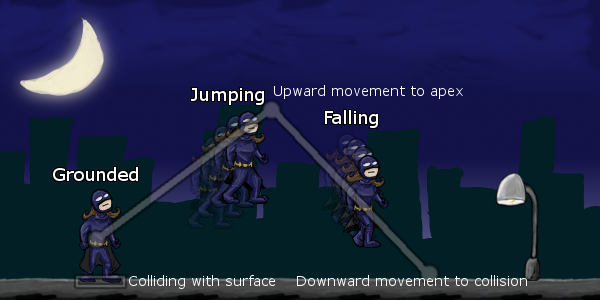

A basic platformer is going to have three mutually exclusive states at a minimum: Grounded, Jumping, and Falling.

Grounded is what the user perceives as the “default” state. In reality, it’s the state that the character is in only when its lower edge is colliding with a surface. Typically, a player can only jump when they’re in a grounded state (or, in the case of double jumping, the jump limit would be reset as soon as the character is grounded again).

Jumping is the state where a character is moving upwards into the air. It’s an active state–that is, it’s usually triggered by player or AI intervention, not simply by interaction with the environment like Grounded or Falling. It’s also a temporary, limited state which ends when a maximum height is reached or a timer is up.

Jumping can also end abruptly due to collision. If the character hits their head–that is, their upper edge collides with a surface, the opposite of the check done for grounded–it’s naturally expected that the player immediately start falling.

In games where the player has more control over their character, jumping can end early if the player releases the jump button. This allows for both quick, short jumps and longer, floatier jumps.
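As a rough sketch, the three ways a jump can end (timer, head collision, releasing the button) can be folded into one check. The class name, method name, and parameters here are all hypothetical, and a height-based limit could stand in for the timer just as easily.

```csharp
public static class JumpRules
{
    // Hypothetical helper: returns true when an in-progress jump should stop
    // and the character should start falling.
    public static bool ShouldEndJump(float elapsedSeconds, float maxSeconds,
                                     bool hitHead, bool jumpHeld)
    {
        if (hitHead) return true;                      // upper edge collided with a surface
        if (elapsedSeconds >= maxSeconds) return true; // the jump timer ran out
        if (!jumpHeld) return true;                    // player released the button early
        return false;                                  // keep rising
    }
}
```

Dropping the `jumpHeld` check gives you fixed-height jumps instead of variable ones.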

Falling is actually the character’s “default” state–when they’re neither in a jump nor colliding with the ground. When falling, the character is affected by gravity. Falling ends when the character becomes grounded again, based on collisions with their lower edge.

Thinking in frames

While it may not seem obvious, there are only a few possible state transitions in a typical platformer. You can’t go directly from Jumping to Grounded without encountering a case that would trigger Falling. You can’t go from Falling to Jumping without first encountering a case that would trigger Grounded. Because you can rule out certain state transitions, you can simplify your logic.
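Here is a minimal sketch of those transition rules as a pure function, with no Unity dependencies. The enum, the class, and the three boolean inputs are names I made up for illustration; how you compute them is up to your controller.

```csharp
public enum MoveState { Grounded, Jumping, Falling }

public static class PlatformerStates
{
    // Returns the state for the next frame. Note what is missing: there is no
    // path from Jumping straight to Grounded, and none from Falling to Jumping.
    public static MoveState Next(MoveState current, bool touchingGround,
                                 bool jumpRequested, bool jumpEnded)
    {
        switch (current)
        {
            case MoveState.Grounded:
                if (!touchingGround) return MoveState.Falling; // walked off a ledge
                return jumpRequested ? MoveState.Jumping : MoveState.Grounded;

            case MoveState.Jumping:
                // A jump can only end by turning into a fall.
                return jumpEnded ? MoveState.Falling : MoveState.Jumping;

            default: // MoveState.Falling
                // Jump requests are ignored until the character lands.
                return touchingGround ? MoveState.Grounded : MoveState.Falling;
        }
    }
}
```

Because each state only looks at the inputs that can affect it, the per-frame logic stays small even as you add more states later.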

I say this isn’t obvious because I’ve never been a hardcore twitch-gamer who can pick out specific frames of animation. To me, playing a Mario Bros. game is a smooth experience–I take in the “big picture,” so to speak. In reality, it’s a series of very tiny moments where the computer is performing very tiny updates and then making decisions based upon them.

This is tricky because it’s easy to work out a “good enough” platformer controller that fails in odd situations if you don’t consider this frame-to-frame precision. For example, 99% of the time the character might behave correctly–but if you end up off by just a fraction, you’ll get weird behaviors. Precision is possible–you just have to figure out how to make it work. One tip is to be aware of Unity’s lifecycle: the order in which callbacks such as FixedUpdate, Update, and LateUpdate run.

Testing for state changes

So how do we detect when the player changes states?

As a Unity newbie, my first attempted solution was to detect collisions with the ground or other platforms using colliders. This isn’t a bad instinct. Colliders take the hard work out of hit detection by turning it into events. You don’t have to constantly check for a state–you simply need to respond to enter, stay, and exit events.

For detecting Grounded/Falling states, this can actually be a pain. Each collision is handled as a discrete event, so you don’t know whether a given OnCollisionExit event means you’ve actually left the ground, because that collider doesn’t know whether other colliders are still triggering OnCollisionStay and OnCollisionEnter events. Technically, it should be possible to track this, but that’s a lot of bookkeeping, and it’s highly dependent upon the order the events are called in (relative to the Unity lifecycle).

Not to mention that it’s hard to get an accurate picture of which direction a collision came from without multiple colliders. That means it’s not easy to differentiate running into a wall (or an enemy) from hitting the ground. Believe me, I’ve tried testing the collision’s relative velocity, and I’ve never felt like I could rule out all the false positives.
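To give a sense of the bookkeeping involved, here is a rough sketch of the event-based approach using the 2D callbacks. It is not something I’d call robust: the class name and the 0.5 threshold are made up, and it assumes a contact normal pointing mostly upward means the surface is underneath the character, which is worth verifying in your own scene.

```csharp
using System.Collections.Generic;
using UnityEngine;

public class GroundContactTracker : MonoBehaviour
{
    // Every collider currently supporting the character from below.
    private readonly HashSet<Collider2D> groundColliders = new HashSet<Collider2D>();

    public bool IsGrounded => groundColliders.Count > 0;

    private void OnCollisionEnter2D(Collision2D collision) => Classify(collision);
    private void OnCollisionStay2D(Collision2D collision) => Classify(collision);

    private void OnCollisionExit2D(Collision2D collision)
    {
        // Leaving one collider does not mean leaving the ground:
        // other colliders may still be in the set.
        groundColliders.Remove(collision.collider);
    }

    private void Classify(Collision2D collision)
    {
        bool supportsUs = false;
        for (int i = 0; i < collision.contactCount; i++)
        {
            // Assumption: an upward-pointing normal means we are standing on it,
            // as opposed to touching a wall or ceiling.
            if (collision.GetContact(i).normal.y > 0.5f)
            {
                supportsUs = true;
                break;
            }
        }

        if (supportsUs) groundColliders.Add(collision.collider);
        else groundColliders.Remove(collision.collider);
    }
}
```

Even this much code still depends on when the physics callbacks fire relative to your movement code, which is the ordering problem described above.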

Raycasting

Unity has various physics casting functions (some of which, I suppose, aren’t technically raycasting) that all do variants of the same thing: they test a specified area for colliders.

Currently, I tend to lean on Physics2D.OverlapCircleAll, which returns an array of all the Collider2D objects within a given radius of a point. You can then check that array to determine whether any ground layers were hit.

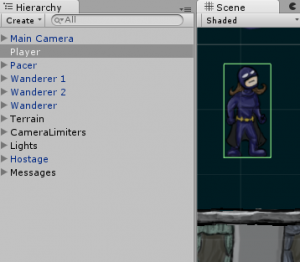

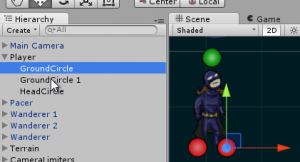

I attach a couple of empty GameObjects as test points, which I then pass into my controller script. For example, in the screenshot here I use two ground points and one head point (highlighted with red and green icons respectively). Add as many as you need based on the size of your cast area–you want to avoid any case where a character could technically be over a walkable object without returning a raycast hit.

In my last Ludum Dare game, I used something like this:

```csharp
var colliders = Physics2D.OverlapCircleAll(transform.position, 0.1f,
    LayerMask.GetMask("Terrain"));

// Test colliders to see if there are any ground objects
```

You call this each Update or FixedUpdate, depending on how you’re adjusting your physics. Test for grounded (or whether a character hits their head in a jump) before you do anything else, because it’s going to determine what state transitions you allow, what movement you apply to the character, and what you allow the player or AI to do. (That is, the character must react to the environment before they can react to the player or AI’s input.)
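A sketch of that ordering, using the test points described earlier, might look like the following. The class and field names are hypothetical, the "Terrain" layer comes from the snippet above, and I’ve swapped in Physics2D.OverlapCircle (which returns a single collider or null) because this version only needs a yes/no answer.

```csharp
using UnityEngine;

public class EnvironmentFirstController : MonoBehaviour
{
    [SerializeField] private Transform[] groundPoints; // empty GameObjects at the feet
    [SerializeField] private Transform[] headPoints;   // empty GameObjects at the head
    [SerializeField] private float checkRadius = 0.1f;

    private int terrainMask;
    private bool grounded;
    private bool hitHead;

    private void Awake()
    {
        terrainMask = LayerMask.GetMask("Terrain");
    }

    private void FixedUpdate()
    {
        // 1. React to the environment first.
        grounded = AnyPointTouches(groundPoints);
        hitHead = AnyPointTouches(headPoints);

        // 2. Then decide on state transitions using grounded / hitHead.
        // 3. Then apply movement and player or AI input for the new state.
    }

    private bool AnyPointTouches(Transform[] points)
    {
        foreach (Transform point in points)
        {
            if (Physics2D.OverlapCircle(point.position, checkRadius, terrainMask) != null)
            {
                return true;
            }
        }
        return false;
    }
}
```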

Let me give a disclaimer: I really need to work out a better approach. I suspect there are some issues with my circular cast not lining up perfectly with my character’s box collider, and there’s probably a more performant approach than returning all collisions. Still, this should be a good starting point, although I encourage you to try out some of the other methods in Physics2D (or Physics, if you’re using 3D colliders) that may work better for your particular setup.
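One alternative worth trying, sketched here rather than battle-tested, is Physics2D.OverlapBox with a thin strip sized from the character’s BoxCollider2D. The class name, the `skin` thickness, and the 0.9 width factor are assumptions, and it presumes the character itself is not on the "Terrain" layer.

```csharp
using UnityEngine;

[RequireComponent(typeof(BoxCollider2D))]
public class BoxGroundCheck : MonoBehaviour
{
    [SerializeField] private float skin = 0.05f; // how far below the feet to look

    private BoxCollider2D box;
    private int terrainMask;

    private void Awake()
    {
        box = GetComponent<BoxCollider2D>();
        terrainMask = LayerMask.GetMask("Terrain");
    }

    public bool IsGrounded()
    {
        Bounds bounds = box.bounds;

        // A thin strip just under the collider's bottom edge, slightly narrower
        // than the collider so that walls at the sides don't count as ground.
        Vector2 center = new Vector2(bounds.center.x, bounds.min.y - skin * 0.5f);
        Vector2 size = new Vector2(bounds.size.x * 0.9f, skin);

        // Returns one collider (or null) instead of an array of every hit.
        return Physics2D.OverlapBox(center, size, 0f, terrainMask) != null;
    }
}
```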

Admittedly, tinkering is difficult. This stuff is happening at such small intervals, sometimes at varied points in the Unity lifecycle (Update vs. FixedUpdate vs. LateUpdate vs. coroutines). And you can’t easily visualize it without writing your own editor gizmo code. As a newbie, I’ve found there’s a certain level of blind trust (sometimes misplaced) involved.
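The gizmo code doesn’t have to be much, though. As a minimal sketch, assuming the same hypothetical test-point arrays and check radius as a circle-based ground check, something like this draws each test area in the Scene view whenever the object is selected:

```csharp
using UnityEngine;

public class CheckPointGizmos : MonoBehaviour
{
    [SerializeField] private Transform[] groundPoints;
    [SerializeField] private Transform[] headPoints;
    [SerializeField] private float checkRadius = 0.1f;

    // Called by the editor while this GameObject is selected.
    private void OnDrawGizmosSelected()
    {
        Draw(groundPoints, Color.red);
        Draw(headPoints, Color.green);
    }

    private void Draw(Transform[] points, Color color)
    {
        if (points == null) return;

        Gizmos.color = color;
        foreach (Transform point in points)
        {
            if (point != null)
            {
                Gizmos.DrawWireSphere(point.position, checkRadius);
            }
        }
    }
}
```

This shows where the checks happen, not when they happen, so it helps with placement but not with lifecycle timing.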

This is also a case where setting up your physics layers matters: if you do it right, you should minimize the amount of “testing” you have to do with the results.

Note that while I’m using raycasting to determine the player’s overall state, I’m also relying heavily on Unity’s physics and hit detection to handle movement. There’s still a collider on my player character and it still blocks movement when it comes into contact with something.

I use OverlapCircle because I don’t really care how far the character is from the ground, only that it’s near enough to a surface to register as touching. If I didn’t have a collider attached to the character, I’d want to know exactly how far the closest ground object was so that I could adjust falling speed so as not to overshoot.
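For the collider-less case, a downward Physics2D.Raycast gives you that distance. This is only a sketch of the idea; the helper and its parameters are hypothetical, and `feet` is assumed to be a point on the character’s lower edge.

```csharp
using UnityEngine;

public static class GroundDistance
{
    // Returns how far the character may fall this step without passing
    // through the nearest ground below its feet.
    public static float ClampFallStep(Vector2 feet, float desiredStep, int groundMask)
    {
        RaycastHit2D hit = Physics2D.Raycast(feet, Vector2.down, desiredStep, groundMask);

        // No collider within range: the full step is safe.
        if (hit.collider == null) return desiredStep;

        // Otherwise stop at the surface instead of overshooting it.
        return hit.distance;
    }
}
```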

Speaking of which, that brings us to…

Movement

There are a few ways you can handle movement in Unity. For the most part, you should technically be able to get similar results with any of these, assuming you can figure out how.

The approach you take determines how much of Unity’s built-in features you can use (versus how much you have to write yourself) and what mode you’ll have to think in.

You can set an object’s transform position directly. This is sort of a brute-force approach. Triggers and colliders will still fire, but you don’t have the benefit of continuous collision detection, unless you code it yourself. In mathematical terms, this means you have to think about position at a given time, or f(x). (Realistically, you’ll probably be working out speed/velocity, or f'(x), and applying it.)
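A bare-bones sketch of this first approach, with a hypothetical class name and a velocity field that some other code is assumed to set:

```csharp
using UnityEngine;

public class TransformMover : MonoBehaviour
{
    // Units per second; assumed to be set elsewhere from input and state.
    public Vector2 velocity;

    private void Update()
    {
        // Work out the velocity (f'), then apply it to the position (f)
        // scaled by the time since the last frame.
        transform.position += (Vector3)(velocity * Time.deltaTime);
    }
}
```

Nothing here stops the object from tunneling through a thin platform on a long frame, which is the part you’d have to code yourself.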

You can set a Rigidbody’s velocity directly. This is my preferred approach, because it means I don’t have to think too much about physics, but I still get the benefit of the physics system adjusting for collisions. In mathematical terms, this means you’re only working with the player’s velocity at a given time, or f'(x).

Typically, I set Rigidbody velocity in FixedUpdate as long as there’s a change in velocity required from the last FixedUpdate call. I set drag and gravity scale to 0, because I don’t want anything else tinkering with velocity if I can help it. Because Unity velocity represents units per second, it’s possible to use Time.fixedDeltaTime to work out just what velocity needs to be.
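Roughly, that setup could look like this. It’s a sketch under a few assumptions: a Rigidbody2D, the legacy Input Manager’s "Horizontal" axis, and made-up names throughout. Newer Unity versions rename `velocity` and `drag` to `linearVelocity` and `linearDamping`, so adjust for your version.

```csharp
using UnityEngine;

[RequireComponent(typeof(Rigidbody2D))]
public class VelocityMover : MonoBehaviour
{
    [SerializeField] private float runSpeed = 6f; // units per second

    private Rigidbody2D body;
    private Vector2 targetVelocity;

    private void Awake()
    {
        body = GetComponent<Rigidbody2D>();
        body.gravityScale = 0f; // the controller applies its own gravity
        body.drag = 0f;         // nothing else should slow the character down
    }

    private void Update()
    {
        // Read input every frame; apply it on the physics step.
        // The vertical component would come from the jump/fall logic.
        targetVelocity = new Vector2(Input.GetAxisRaw("Horizontal") * runSpeed,
                                     targetVelocity.y);
    }

    private void FixedUpdate()
    {
        // Only touch the Rigidbody when the velocity actually needs to change.
        if (body.velocity != targetVelocity)
        {
            body.velocity = targetVelocity;
        }
    }

    // Velocity is in units per second, so covering `distance` units in a
    // single physics step takes distance / Time.fixedDeltaTime.
    public static float VelocityForStep(float distance)
    {
        return distance / Time.fixedDeltaTime;
    }
}
```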

Note that one reason I like using Unity’s built-in physics and hit detection is I have to think less about exact collision positions. I’ve seen platformer controllers that don’t require colliders, but that means you have to think about not just the character’s current speed, but the distance to the nearest object. This isn’t impossible, but it’s an extra layer of complexity I’d prefer to get into only if necessary.

You can apply force to a Rigidbody. This takes full advantage of Unity’s physics, but it requires a little more work. You’re not only going to have to determine what force you will apply, but you’ll have to tweak the object’s gravity scale, mass, and drag to get it feeling right. In mathematical terms, you’re indirectly working with acceleration, or f''(x).

I’d avoid this on the player character, because you want those controls to feel tight, which is harder to figure out with several degrees of separation between your code and the character’s actual movement speed.
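For something other than the player (say, a crate or a bouncing enemy), a force-driven sketch might look like this. The class, fields, and `Hop` method are hypothetical, and the numbers only mean anything once you’ve tuned mass, drag, and gravity scale alongside them.

```csharp
using UnityEngine;

[RequireComponent(typeof(Rigidbody2D))]
public class ForceDriven : MonoBehaviour
{
    [SerializeField] private Vector2 pushDirection = Vector2.right;
    [SerializeField] private float pushForce = 20f;

    private Rigidbody2D body;

    private void Awake()
    {
        body = GetComponent<Rigidbody2D>();
    }

    private void FixedUpdate()
    {
        // A continuous force changes acceleration; the resulting speed
        // depends on the body's mass, drag, and whatever it collides with.
        body.AddForce(pushDirection.normalized * pushForce);
    }

    // A one-off upward kick, applied as an instantaneous impulse.
    public void Hop(float impulse)
    {
        body.AddForce(Vector2.up * impulse, ForceMode2D.Impulse);
    }
}
```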

In a later post, I’ll get into more of the math behind movement, since that’s the difference between a clunky game and a smooth one. (I will say that I find Microsoft Mathematics incredibly useful for this sort of thing, since you can easily plot changes in speed or position over time.)